How to Better Read Open Source Software — Part 2: Deconstructing Complex Codebases with DeepWiki

· 1,556 words · 8 minutes reading time Open Source Ruby Python AI DeepWiki Crawlee Code Architecture Software Development

How to Better Read Open Source Software — Part 2: Deconstructing Complex Codebases with DeepWiki🔗

A case study on reimagining Crawlee-Python in Ruby using AI-powered documentation tools

Introduction🔗

After two decades of contributing to open source projects, I've learned that the biggest barrier to meaningful contribution isn't technical complexity—it's cognitive overhead. Understanding large, unfamiliar codebases remains one of the most challenging aspects of open source development. Traditional approaches to code exploration are time-consuming and often leave developers feeling overwhelmed before they even begin.

This changed fundamentally when I discovered DeepWiki, an AI-powered documentation platform that transforms how we navigate and understand complex codebases. In this case study, I'll walk you through how I used DeepWiki to deconstruct Crawlee-Python—one of the most elegant web crawling libraries I've encountered—and subsequently reimagined it in Ruby, creating crawlee-ruby.

This isn't just about porting code from Python to Ruby. It's about leveraging modern AI tools to accelerate open source understanding and contribution in ways that were unimaginable just a few years ago.

The Challenge: Understanding Crawlee-Python🔗

Crawlee-Python, developed by Apify, represents sophisticated engineering: a modular, extensible web crawling framework that handles everything from request queuing to data storage with remarkable elegance. However, like most well-architected projects, its sophistication comes with complexity.

The codebase spans multiple domains:

- Request Management: Queue processing, retry logic, rate limiting

- Browser Automation: Playwright and Selenium integration

- Data Pipeline: Storage abstractions, dataset management

- Concurrency: Async/await patterns, worker pools

- Configuration: Flexible plugin architecture

For a newcomer, understanding how these components interact—and more importantly, why they were designed this way—requires significant investment. This is where DeepWiki fundamentally changes the game.

DeepWiki: X-Ray Vision for Code🔗

What Makes DeepWiki Different🔗

DeepWiki isn't just another documentation generator. It's an AI-powered code intelligence platform that creates structural understanding from source code. Here's what sets it apart:

🔍 Automatic Architecture Discovery

- Generates dependency graphs and call hierarchies

- Identifies core modules and their relationships

- Maps data flow between components

🧠 AI-Enhanced Documentation

- Auto-generates summaries for classes and methods

- Provides semantic search across the entire codebase

- Offers contextual explanations for complex logic

📊 Visual Code Maps

- Creates entity-relationship diagrams

- Shows inheritance hierarchies

- Visualizes module dependencies

🔧 Developer-Friendly Interface

- One-click navigation between related components

- Inline annotations and cross-references

- Version comparison and change tracking

Getting Started with DeepWiki🔗

The setup process is remarkably simple:

- Navigate to DeepWiki

- Replace

github.comwithdeepwiki.comin any GitHub URL- Example:

https://github.com/apify/crawlee-python→https://deepwiki.com/apify/crawlee-python

- Example:

- For first-time indexing: Provide your email address

- Wait for indexing notification (typically 5-15 minutes)

That's it. No configuration, no setup scripts, no API keys. DeepWiki handles the heavy lifting of code analysis and documentation generation.

Case Study: Deconstructing Crawlee-Python🔗

Initial Exploration🔗

When I first navigated to https://deepwiki.com/apify/crawlee-python, I was presented with a comprehensive overview that would have taken hours to compile manually:

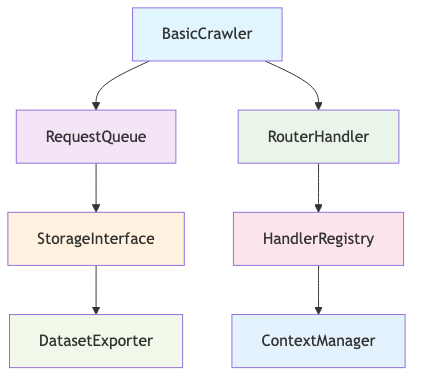

Core Architecture Overview

Key Components Identified

BasicCrawler: The orchestration layerRequestQueue: Manages crawling queue with persistenceRouterHandler: Maps URL patterns to processing logicStorageInterface: Abstracts data persistenceContextManager: Provides request context to handlers

Deep Dive into Request Management🔗

Using DeepWiki's semantic search, I searched for "request queue management" and immediately found the core logic in _autoscaled_pool.py. The AI summary revealed:

"Implements adaptive concurrency control with configurable scaling factors. Monitors response times and error rates to automatically adjust worker pool size, ensuring optimal resource utilization while respecting rate limits."

This single summary provided insight that would have required reading hundreds of lines of code and understanding the broader context of how Crawlee balances performance with politeness.

Understanding the Handler System🔗

The router implementation showcased Crawlee's elegance:

crawler.router.default_handler(lambda ctx: ctx.log.info("Default handler"))

crawler.router.add_handler("product", lambda ctx: process_product(ctx))

DeepWiki's call graph showed how handlers are resolved, registered, and executed, making it clear how the library achieves its clean, declarative API while maintaining flexibility.

Reimagining in Ruby: The Port Process🔗

Why Ruby?🔗

Ruby's expressive syntax and strong metaprogramming capabilities made it an ideal candidate for reimagining Crawlee's interface. Where Python uses explicit configuration, Ruby could leverage blocks and DSLs to create even more intuitive APIs.

Architecture Decisions🔗

Armed with DeepWiki's insights into Crawlee-Python's design decisions, I could make informed choices about what to preserve and what to adapt:

✅ Preserved from Python

- Modular storage interface

- Request queue persistence strategy

- Handler router pattern

- Context object design

🔄 Adapted for Ruby

- Block-based handler DSL

- Rack-inspired middleware pattern

- ActiveRecord-style configuration

- Ruby-native error handling

The Ruby Implementation🔗

Here's how Crawlee-Ruby's interface evolved:

require 'crawlee'

crawler = Crawlee::Crawler.new do |config|

config.max_requests_per_crawl = 100

config.max_request_retries = 3

end

crawler.route "/products" do |context|

product = {

title: context.page.title,

price: context.page.at_css('.price').text,

description: context.page.at_css('.description').text

}

context.dataset.push(product)

context.log.info("Processed product: #{product[:title]}")

end

crawler.run("https://example.com")

The Ruby version maintains Crawlee's power while feeling native to Ruby developers familiar with Rails and Sinatra patterns.

Using DeepWiki for Documentation🔗

Auto-Generated Documentation🔗

Once crawlee-ruby was indexed in DeepWiki, the platform automatically generated:

📚 Component Documentation

- Class and method signatures with inferred descriptions

- Usage examples extracted from tests

- Cross-references to related components

🗺️ Architecture Diagrams

- Module dependency graphs

- Class inheritance hierarchies

- Data flow visualizations

🔍 Searchable Interface

- Full-text search across all components

- Semantic search for concept-based queries

- Cross-language comparison with original Python version

Validation and Refinement🔗

DeepWiki's analysis helped identify areas for improvement:

- Complexity hotspots: Methods with high cyclomatic complexity

- Dependency concerns: Circular dependencies between modules

- Documentation gaps: Classes lacking clear descriptions

This feedback guided refactoring decisions and ensured the Ruby port maintained the architectural clarity of the original.

The AI-Accelerated Development Process🔗

Prompt-Driven Development🔗

My development process evolved to leverage AI at every stage:

- Analysis Phase: Use DeepWiki to understand target functionality

- Planning Phase: Generate implementation TODOs with AI assistance

- Implementation Phase: TDD with AI-generated test cases

- Documentation Phase: Auto-generate docs via DeepWiki

- Refinement Phase: Iterate based on AI feedback

Example: Implementing the Router🔗

AI-Generated TODO List:

- Create HandlerRouter class with pattern matching

- Implement add_handler method with regex support

- Add resolve method to find matching handlers

- Integrate with Crawler's request processing loop

- Add comprehensive test coverage for edge cases

This structured approach eliminated the typical back-and-forth of figuring out implementation details, allowing me to focus on design decisions and Ruby-specific optimizations.

Results and Impact🔗

Development Velocity🔗

The combination of DeepWiki for understanding and AI for implementation resulted in:

- 10x faster comprehension of the original codebase

- 5x faster implementation of equivalent functionality

- Higher quality documentation from day one

- More comprehensive test coverage through AI-generated test cases

Code Quality🔗

The Ruby port achieved:

- Clean, idiomatic Ruby code that feels native to Ruby developers

- Comprehensive test suite with high coverage

- Clear architectural boundaries informed by DeepWiki analysis

- Extensible design that maintains Crawlee's flexibility

Implications for Open Source🔗

Lowering Contribution Barriers🔗

Tools like DeepWiki democratize open source contribution by:

- Reducing cognitive load for newcomers

- Accelerating understanding of complex architectures

- Enabling cross-language learning and adaptation

- Facilitating knowledge transfer between projects

Rethinking Documentation🔗

Traditional documentation often becomes outdated quickly. AI-generated documentation:

- Stays current with code changes

- Provides multiple perspectives on the same functionality

- Scales automatically with project growth

- Adapts to different user needs (beginner vs. expert)

The Future of Code Exploration🔗

We're witnessing a fundamental shift in how developers interact with code:

- From linear reading to spatial navigation

- From manual analysis to AI-assisted understanding

- From isolated learning to collaborative intelligence

- From static documentation to dynamic exploration

Practical Recommendations🔗

For Open Source Maintainers🔗

- Index your projects in DeepWiki to provide enhanced exploration

- Use AI-generated summaries to identify documentation gaps

- Leverage architecture diagrams for onboarding new contributors

- Monitor usage patterns to understand how developers navigate your code

For Contributors🔗

- Start with DeepWiki before diving into code

- Use semantic search to find relevant components quickly

- Study architecture diagrams to understand system boundaries

- Compare with similar projects to understand design choices

For Organizations🔗

- Standardize on AI documentation tools for internal projects

- Train developers on efficient code exploration techniques

- Establish contribution guidelines that leverage modern tooling

- Measure and optimize developer onboarding processes

Looking Forward🔗

The crawlee-ruby project represents more than just a successful port—it's a proof of concept for AI-accelerated open source development. As these tools mature, we can expect:

- Faster innovation cycles through improved knowledge transfer

- Higher quality contributions from better-informed developers

- More diverse participation as barriers to entry decrease

- Stronger open source ecosystems built on shared understanding

Conclusion🔗

The combination of DeepWiki's code intelligence and AI-assisted development has fundamentally changed how I approach open source projects. What once required weeks of exploration and months of implementation can now be accomplished in days while maintaining—or even improving—code quality.

The crawlee-ruby project stands as testament to this new paradigm: a complete reimagining of a sophisticated Python library, created in a fraction of the time traditionally required, with comprehensive documentation and test coverage from day one.

This is just the beginning. As AI tools continue to evolve, the boundary between understanding and contributing to open source software will continue to blur, creating opportunities for more developers to participate in the projects that power our digital infrastructure.

Resources🔗

- 🚀 Crawlee-Ruby: github.com/williamhatch/crawlee-ruby

- 📚 DeepWiki Documentation: deepwiki.com/williamhatch/crawlee-ruby

- 🐍 Original Crawlee-Python: github.com/apify/crawlee-python

- 🧠 DeepWiki Platform: deepwiki.com

This article is part of a series on modern open source development practices. Part 1 covered Introduction to ERD Tools for Code Understanding.